The Rise of the AI-Supported Generalist

The role of a developer has always been expanding. Once, being full-stack meant juggling frontend and backend. Later, it meant cloud deployment, APIs, and maybe even DevOps pipelines. But if we zoom out, building a product involves far more than code. It spans client outreach, design, testing, documentation, analytics, and continuous strategy.

That’s why I believe the next evolution is full-cycle. It’s about owning the entire arc of product creation, from the first client conversation to data-driven iteration after launch. Every stage of that arc can be supported by AI and modern tooling.

The Full-Cycle Stack

Client Acquisition & Outreach

The product journey starts before code is written. Traditionally, this step required a sales or marketing team. Today, AI makes client acquisition more accessible.

- How AI Helps: Drafting proposals, creating pitch decks, personalizing outreach emails, and even analyzing leads.

- Tools: ChatGPT or Claude for proposal drafts, Jasper or Copy.ai for polished marketing copy, Notion AI for tracking CRM notes, and Apollo.io for lead generation.

Research & Discovery

Before building, you need to deeply understand the client’s needs, the market, and the tech landscape.

- How AI Helps: NotebookLM and Perplexity transform raw documents, whitepapers, or competitor sites into a living, queryable knowledge base. Instead of siloed notes, you get an interactive research layer.

- Tools: NotebookLM, Obsidian, Perplexity (domain research with citations).

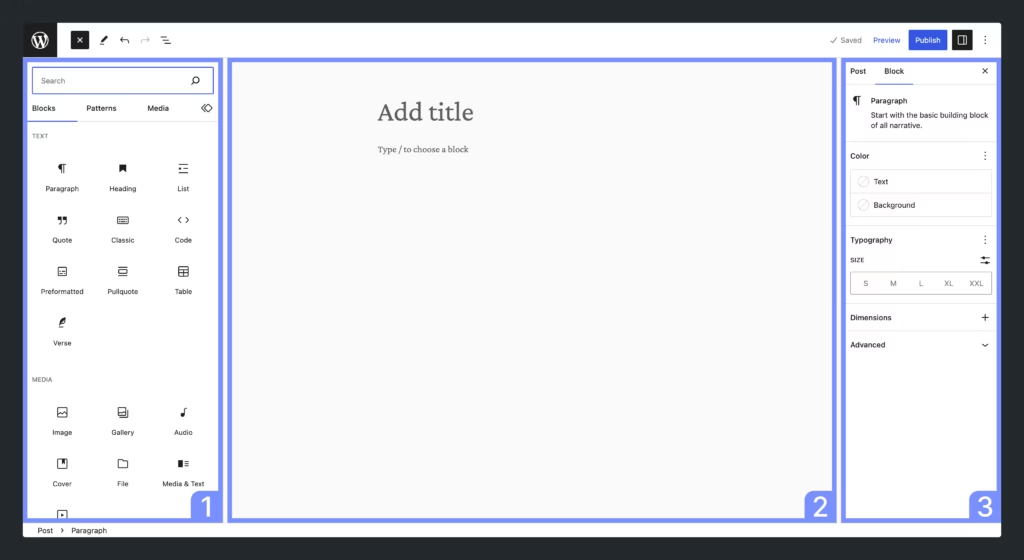

Design & UX

A good product isn’t just functionally correct; it’s intuitive and accessible. AI now accelerates prototyping and validation.

- How AI Helps: Wireframing from sketches, generating UI variations, and running accessibility checks early. Designers can iterate faster without losing creative control.

- Tools: Figma + Magician AI or Genius (AI-assisted design plugins), Uizard (turn sketches into prototypes), Stark (accessibility audits), Midjourney (mood boards and early visual ideas).

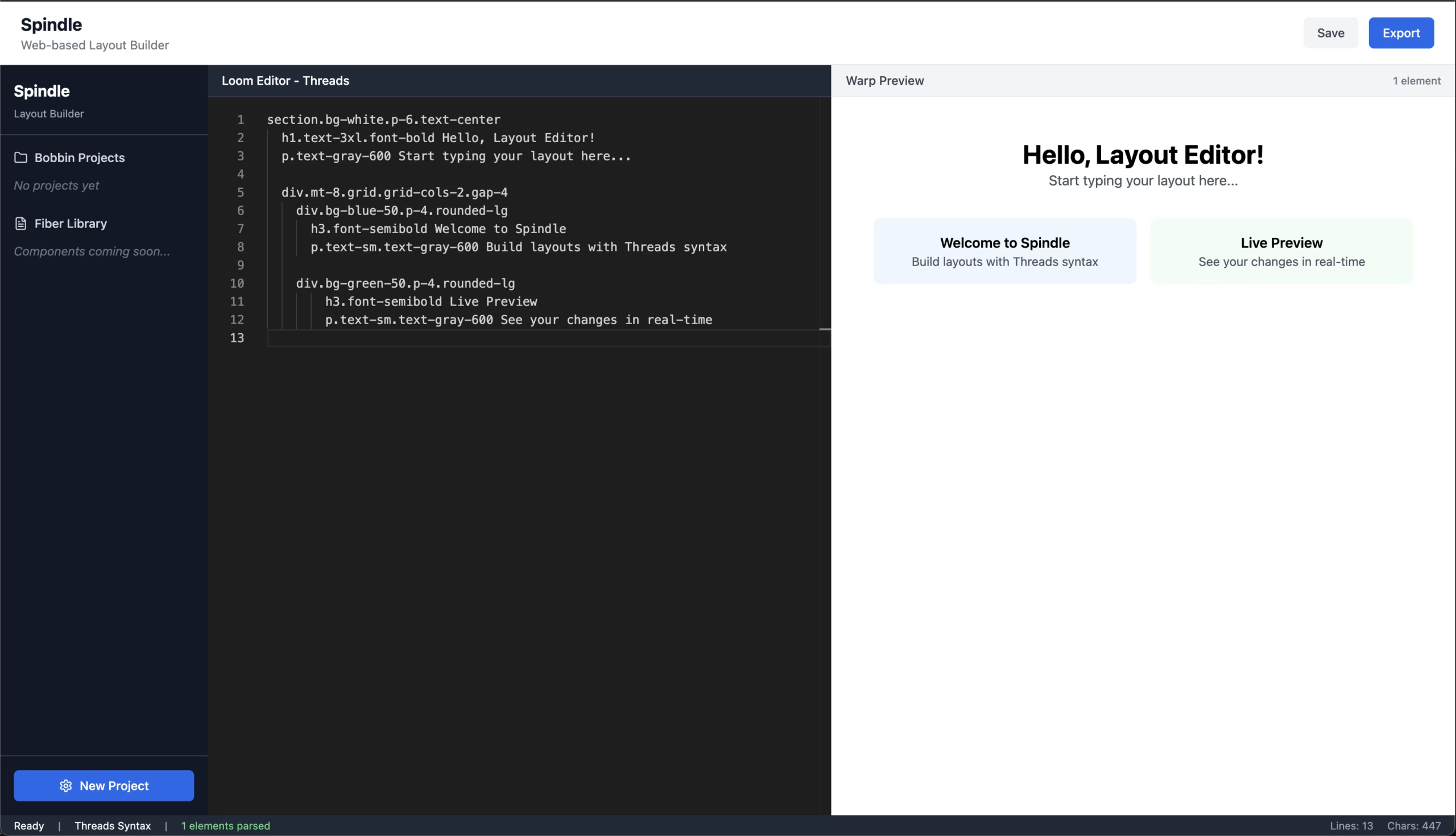

Backend & Frontend Development

The core of full-stack development is still here, but AI makes it easier to move across languages and frameworks.

- How AI Helps: Cursor and GitHub Copilot function like context-aware pair programmers. They scaffold, explain, and optimize code, reducing time spent debugging syntax trivia.

- Tools: Cursor (AI-first IDE), GitHub Copilot (inline coding assistant), Replit Ghostwriter (rapid prototyping), LangChain / Vercel AI SDK (building AI features into apps).

DevOps & Deployment

Getting a product live used to require an ops specialist or some very carefully built pipelines. With AI, developers can manage infrastructure with far more confidence.

- How AI Helps: Generate Dockerfiles, CI/CD pipelines, and infrastructure-as-code templates while explaining trade-offs. AI copilots also surface deployment issues quickly.

- Tools: GitHub Actions + Copilot (pipeline scaffolding), Terraform + Pulumi AI (infra-as-code with AI support), Railway / Render (AI-friendly deployment platforms).

QA & Testing

Testing is often under-prioritized, but AI removes friction here.

- How AI Helps: Generate unit and integration tests automatically, simulate edge cases, and provide regression coverage. QA becomes proactive instead of reactive.

- Tools: CodiumAI / TestGPT (test generation), Cypress (end-to-end tests, which Cursor or GitHub Copilot can help write), SonarLint (code quality + static analysis).
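To make "simulate edge cases" concrete, here is a minimal sketch of the kind of test file an assistant might draft for a small helper. The `slugify` function and the specific cases are hypothetical, chosen only for illustration; they are not output from any of the tools listed above.

```python
import re
import unittest


def slugify(title: str) -> str:
    """Turn a page title into a URL-safe slug (hypothetical helper)."""
    # Lowercase, then collapse every run of non-alphanumerics into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    # Trim hyphens left over from leading or trailing punctuation.
    return slug.strip("-")


class SlugifyEdgeCases(unittest.TestCase):
    def test_typical_title(self):
        self.assertEqual(slugify("The Future Is Full-Cycle"), "the-future-is-full-cycle")

    def test_empty_string(self):
        self.assertEqual(slugify(""), "")

    def test_only_punctuation(self):
        self.assertEqual(slugify("?!..."), "")

    def test_repeated_separators(self):
        self.assertEqual(slugify("QA  &  Testing"), "qa-testing")

    def test_non_ascii_letters_are_dropped(self):
        # Documents current behavior; a real project might transliterate instead.
        self.assertEqual(slugify("Café Menu"), "caf-menu")
```

Saved as a test module, this runs with `python -m unittest`. The value is less in the typical case than in the empty, punctuation-only, and non-ASCII inputs, which are the ones most likely to be skipped when tests are written by hand under deadline.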

Documentation (Automated & Continuous)

Documentation is the lifeblood of maintainable projects, and one of the easiest things to let slide.

- How AI Helps: Generate developer docs, API references, onboarding guides, and changelogs directly from code and commits. Instead of being a separate chore, documentation evolves alongside the codebase.

- Tools: Mintlify (AI-generated docs from code), Swimm (interactive walkthroughs), Docusaurus + GPT pipelines (custom doc sites), ReadMe AI (dynamic API docs).
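As a rough illustration of changelogs generated "directly from commits", the sketch below groups commit subject lines into a changelog entry. It assumes the team writes Conventional Commits style prefixes (`feat:`, `fix:`, `docs:`); the section names, the `build_changelog` function, and the sample commits are all hypothetical. An AI step would typically sit on top of something like this to rewrite terse subjects into readable notes.

```python
from collections import defaultdict

# Assumed mapping from Conventional Commits prefixes to changelog headings.
SECTIONS = {"feat": "Features", "fix": "Bug Fixes", "docs": "Documentation"}


def build_changelog(version: str, commit_subjects: list[str]) -> str:
    """Group commit subject lines into a plain-text changelog entry."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for subject in commit_subjects:
        prefix, sep, summary = subject.partition(":")
        # Drop an optional scope, e.g. "fix(api)" -> "fix".
        kind = prefix.split("(")[0].strip().lower()
        if sep and kind in SECTIONS:
            grouped[SECTIONS[kind]].append(summary.strip())
        else:
            # Anything without a recognized prefix is kept verbatim.
            grouped["Other"].append(subject.strip())

    lines = [f"Version {version}"]
    for heading in [*SECTIONS.values(), "Other"]:
        if grouped[heading]:
            lines.append(f"{heading}:")
            lines.extend(f"  - {item}" for item in grouped[heading])
    return "\n".join(lines)


# Hypothetical commit subjects, e.g. collected from `git log --format=%s`.
commits = [
    "feat(auth): add passwordless login",
    "fix: handle empty search query",
    "docs: describe deployment steps",
    "bump dependencies",
]
print(build_changelog("1.4.0", commits))
```

Because the grouping is deterministic, it can run in CI on every release, which is what keeps the changelog evolving with the codebase instead of being written after the fact.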

Maintenance & Support

After launch, the product still requires care.

- How AI Helps: Triage bug reports, summarize issue threads, draft support responses, and provide self-updating FAQs. The support burden is reduced without losing the human touch.

- Tools: Intercom Fin AI (automated customer responses), Zendesk + AI Assist (support automation), Linear AI summaries (for bug/issue triage).

Continuous Strategy

A product isn’t finished at launch; it keeps evolving. I’ve always believed that building a site is an iterative process and that the site should be improved constantly.

Strategy needs to adapt in real time.

- How AI Helps: Model scenarios, forecast usage, and create roadmaps informed by real-time data and simulations. Instead of quarterly updates, strategy becomes continuous.

- Tools: Causal (financial + strategic modeling), Amplitude + AI insights (product strategy analytics), Miro AI (collaborative roadmapping).

Data Analysis & Iteration

Iteration closes the cycle. Data-driven decisions are how products grow, but traditionally this meant waiting on a BI team.

- How AI Helps: Analyze product metrics directly, run exploratory data analysis, and simulate A/B test outcomes. AI enables developers to act as data analyst generalists.

- Tools: ChatGPT Code Interpreter (EDA + visualization), Hex (data notebooks), Mixpanel + AI insights (usage funnels), Google Analytics Intelligence (natural-language queries).
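For a sense of what "simulate A/B test outcomes" can look like in practice, here is a minimal Monte Carlo sketch of the sort an assistant might produce on request. The conversion rates, visitor counts, and the `simulate_ab_test` name are assumptions for illustration, not figures from a real product.

```python
import random


def simulate_ab_test(rate_a: float, rate_b: float, visitors_per_arm: int,
                     runs: int = 1000, seed: int = 42) -> float:
    """Estimate how often variant B records more conversions than variant A."""
    rng = random.Random(seed)  # fixed seed so repeated calls give the same answer
    b_wins = 0
    for _ in range(runs):
        # Each visitor converts independently with the arm's assumed rate.
        conversions_a = sum(rng.random() < rate_a for _ in range(visitors_per_arm))
        conversions_b = sum(rng.random() < rate_b for _ in range(visitors_per_arm))
        if conversions_b > conversions_a:
            b_wins += 1
    return b_wins / runs


# Hypothetical scenario: 10% baseline vs. a hoped-for 12%, 1,000 visitors per arm.
share = simulate_ab_test(rate_a=0.10, rate_b=0.12, visitors_per_arm=1000)
print(f"B came out ahead in {share:.0%} of simulated experiments")
```

Running a sketch like this before launching the experiment gives a quick read on whether the planned sample size is likely to show a difference at all. It is a planning aid under simplifying assumptions (independent visitors, fixed rates) and does not replace a proper significance test on the real data.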

The AI-Supported Generalist

The full-cycle engineer isn’t doing everything alone; they’re leveraging AI to expand their reach. Specialists will always matter, but the AI-supported generalist can bridge gaps, maintain momentum, and keep projects moving forward across the entire lifecycle.

This role is less about replacing people and more about collapsing silos. AI gives us the leverage to handle research, strategy, design, code, QA, documentation, and data as one continuous loop.

Why Full-Cycle Matters

“Full-stack” defined an era where developers expanded vertically across code layers.

“Full-cycle” defines the era where we expand horizontally across the product lifecycle.

From first outreach to post-launch iteration, the cycle never stops. And with AI, the engineer who can flex across it isn’t overwhelmed; they’re supported, accelerated, and amplified.

The future isn’t just full-stack.

The future is full-cycle.